As artificial intelligence (AI) continues to revolutionize healthcare in Australia, the role of legal frameworks becomes increasingly pivotal. For the Australian Institute of Health Technology (AIHT), which specializes in strategic consultancy for AI governance and risk management, understanding how law shapes AI adoption is essential. Legal regulations ensure that AI tools enhance patient safety, equity, and efficiency while mitigating risks such as bias, privacy breaches, and liability issues. In 2025, Australia’s approach remains risk-based and technology-neutral, relying on existing laws rather than new comprehensive AI legislation. This blog post examines the current legal landscape, key regulators, privacy considerations, liability challenges, international influences, and future directions for AI in Australian healthcare.


Australia’s Risk-Based Regulatory Approach

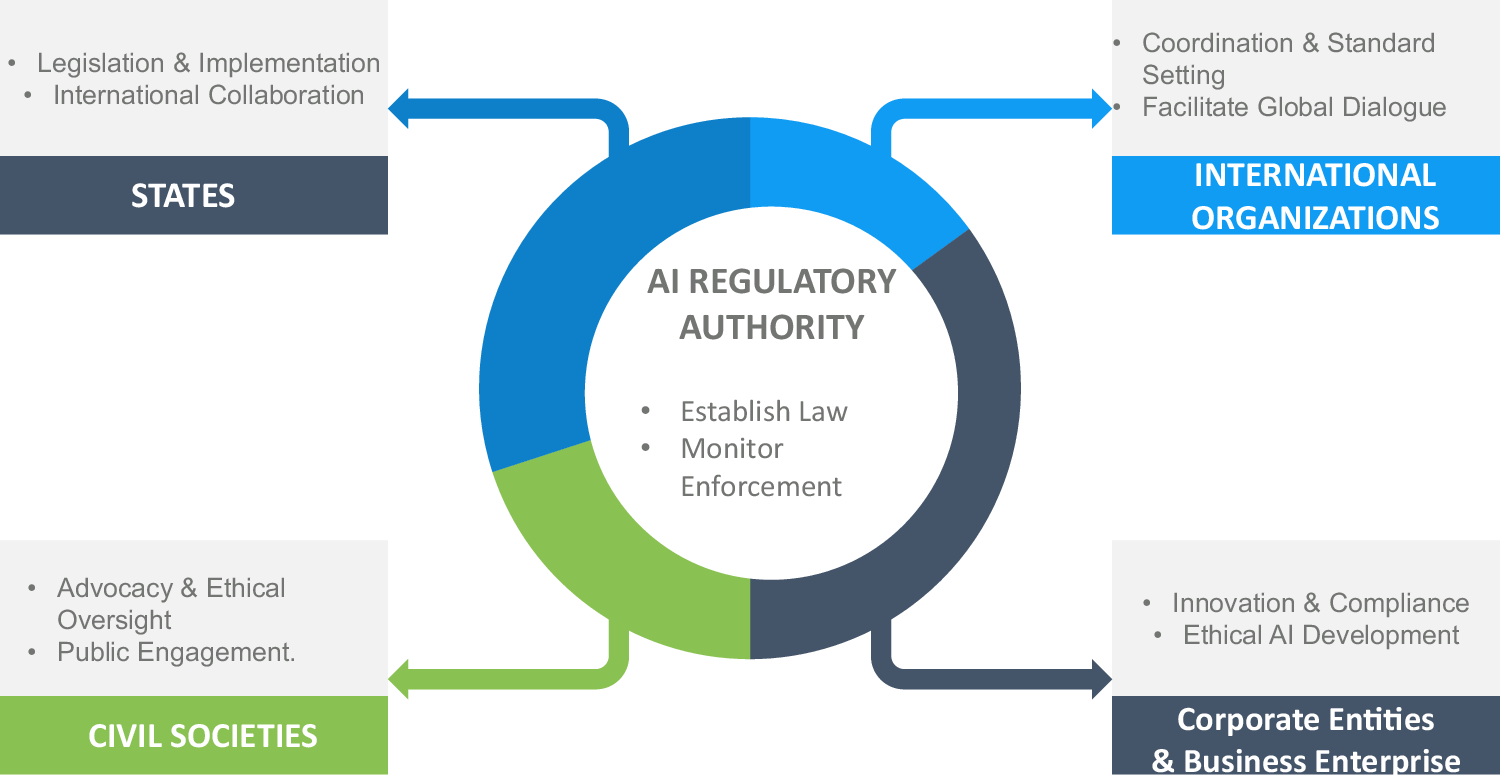

Australia adopts a pragmatic, risk-proportionate strategy for AI regulation, avoiding a standalone AI Act in favor of strengthening existing laws. The National AI Plan, released in December 2025, relies on sector-specific regulators such as the Therapeutic Goods Administration (TGA) for healthcare, supported by voluntary standards and a new AI Safety Institute planned from 2026.

This approach follows the global trend toward risk-based regulation but leans toward enabling innovation while still addressing harms. In healthcare, AI applications range from diagnostic tools to administrative scribes, and the law distinguishes between high-risk medical devices and lower-risk tools.

The TGA regulates AI as a medical device if its intended purpose includes diagnosis, prevention, monitoring, or treatment. Reforms in effect since 2021 set out how software as a medical device (SaMD) is classified, with higher-risk systems requiring inclusion in the Australian Register of Therapeutic Goods (ARTG).
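The purpose-based test described above can be sketched as a rough first-pass screen. This is an illustration only, not the TGA's legal test: the `AITool` class, the `likely_medical_device` function, and the purpose labels are hypothetical names chosen for this example, and a real assessment depends on the manufacturer's stated intended purpose and the TGA's guidance.

```python
from dataclasses import dataclass

# Purposes this post lists as bringing AI within medical-device regulation.
MEDICAL_PURPOSES = {"diagnosis", "prevention", "monitoring", "treatment"}


@dataclass
class AITool:
    """Hypothetical description of an AI product and what it is intended to do."""
    name: str
    intended_purposes: set[str]


def likely_medical_device(tool: AITool) -> bool:
    """Return True if any intended purpose matches a listed medical purpose.

    A simplified screen for internal triage, not a regulatory determination.
    """
    return bool(tool.intended_purposes & MEDICAL_PURPOSES)


scribe = AITool("note transcription scribe", {"transcription"})
xray_model = AITool("chest X-ray triage model", {"diagnosis"})

print(likely_medical_device(scribe))      # False
print(likely_medical_device(xray_model))  # True
```

In this sketch, a scribe that only transcribes falls outside the screen, while the same product would be flagged as soon as its intended purposes included something like suggesting a diagnosis.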


In 2025, the TGA published the “Clarifying and Strengthening the Regulation of Medical Device Software including Artificial Intelligence” report following consultations. It confirms the framework is largely fit-for-purpose but recommends enhancements for transparency, labelling, and post-market monitoring of adaptive AI.

Key Legal Frameworks and Regulators

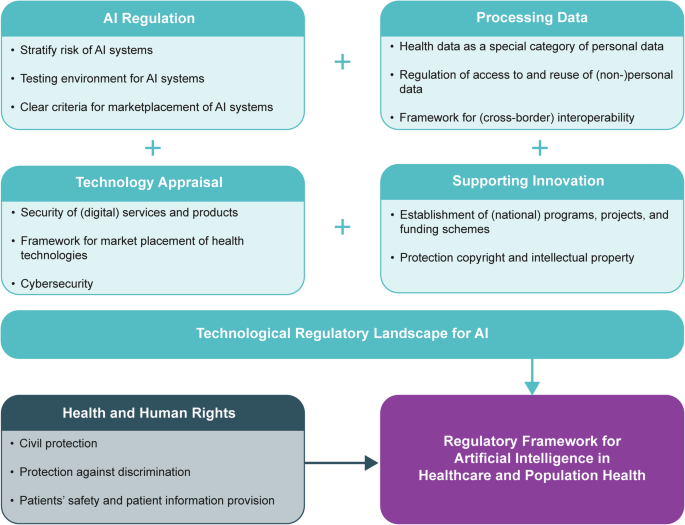

Several laws and bodies govern AI in healthcare:

- Therapeutic Goods Act 1989 and TGA: Core regulation for AI as SaMD. Evidence requirements include risk management for bias, data drift, and generalizability to Australian populations. The TGA maintains a public list of AI-enabled devices in the ARTG and has made compliance a focus, particularly for digital scribes.

- Privacy Act 1988 and Australian Privacy Principles (APPs): Administered by the Office of the Australian Information Commissioner (OAIC), these protect personal health information used in AI training or deployment. Guidance emphasizes privacy by design, consent for secondary uses, and de-identification where possible.

- Australian Health Practitioner Regulation Agency (AHPRA): Guidelines require practitioners to remain accountable when using AI, ensuring human oversight and informed patient consent.

- Australian Commission on Safety and Quality in Health Care: Provides implementation principles and guides for safe AI use in hospitals, focusing on evidence of quality and safety.

The Department of Health and Aged Care’s 2025 “Safe and Responsible Artificial Intelligence in Health Care” review assesses broader impacts, recommending targeted amendments rather than sweeping changes.


Privacy and Data Protection Challenges

Health data’s sensitivity amplifies privacy risks in AI. The Privacy Act applies to the handling of personal information by the organisations it covers, including when that information is used to train AI. OAIC guidance stresses transparency, privacy impact assessments, and avoiding unauthorized secondary uses.

Challenges include de-identification difficulties and offshore data storage in commercial AI tools. Recent cases highlight scrutiny over data use for AI training without clear consent.
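To illustrate why de-identification is harder than it sounds, here is a minimal sketch of a first step: dropping direct identifiers and replacing a patient ID with a salted hash. The field names, the `strip_direct_identifiers` function, and the salt handling are assumptions made for this example. Note that this is pseudonymization of one field, not de-identification in the legal sense, since quasi-identifiers such as postcode and birth year survive and can still allow re-identification.

```python
import hashlib

# Hypothetical field names treated as direct identifiers in this example.
DIRECT_IDENTIFIERS = {"name", "medicare_number", "address", "phone"}


def strip_direct_identifiers(record: dict, salt: str) -> dict:
    """Drop direct identifiers and pseudonymize patient_id with a salted hash.

    Quasi-identifiers (e.g. postcode, birth year) are left untouched,
    which is exactly where re-identification risk remains.
    """
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    if "patient_id" in record:
        digest = hashlib.sha256((salt + str(record["patient_id"])).encode()).hexdigest()
        cleaned["patient_id"] = digest[:16]  # shortened pseudonym
    return cleaned


record = {
    "patient_id": 12345,
    "name": "Jane Citizen",
    "medicare_number": "0000 00000 0",
    "postcode": "2000",
    "birth_year": 1980,
    "diagnosis_code": "J45",
}
safe = strip_direct_identifiers(record, salt="example-salt")
print(sorted(safe.keys()))
```

The output still contains postcode, birth year, and diagnosis code, so any real pipeline would need a further assessment of re-identification risk before the data could be treated as de-identified.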

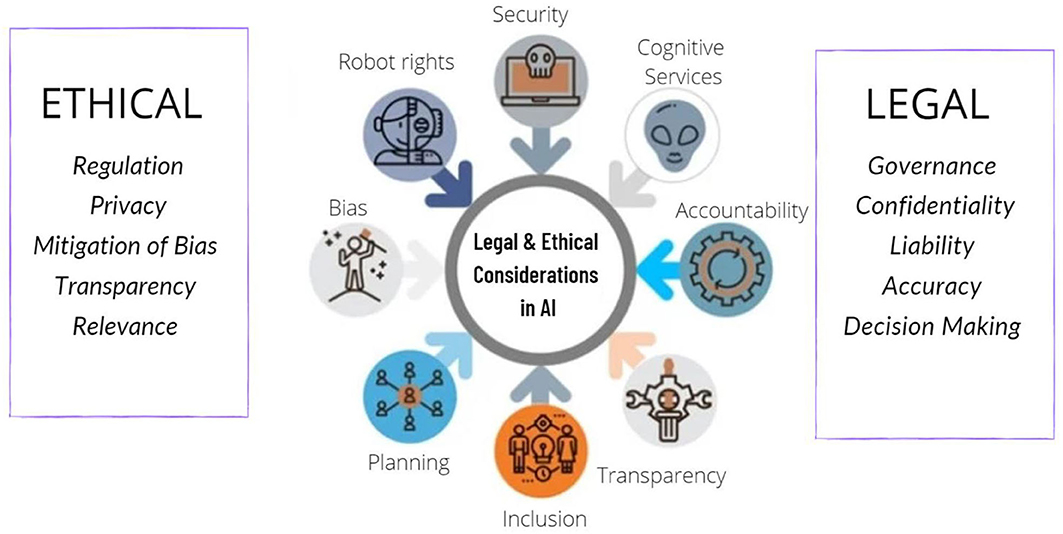

Liability and Ethical Implications

Law shapes accountability: practitioners retain responsibility under professional standards, while manufacturers face product liability. Establishing causation in AI-related harms remains complex because many models are “black boxes” whose reasoning cannot be readily inspected.

Ethical concerns like bias and equity are addressed through existing discrimination and consumer laws, with calls for clearer liability frameworks.

International Influences and Comparisons

Australia draws inspiration from the EU AI Act’s risk-based classification, particularly for high-risk healthcare AI. While not adopting it directly, alignments in transparency and conformity assessments are evident. Global standards from the International Medical Device Regulators Forum influence TGA guidelines.

Challenges and Future Directions

Gaps persist in regulating non-SaMD AI (e.g., administrative tools) and emerging generative AI. The National AI Plan pauses mandatory guardrails, favoring sector-led approaches.

Future steps include targeted consultations in 2026, enhanced education, and potential privacy law reforms. AIHT supports healthcare leaders in navigating these through governance consultancy.


Conclusion

Law plays a crucial role in shaping safe, responsible AI in Australian healthcare, balancing innovation with protection. As of December 2025, the framework prioritizes existing regulations with incremental enhancements. Ongoing collaboration among regulators, industry, and experts like AIHT will ensure AI delivers equitable benefits.

References

- Safe and Responsible Artificial Intelligence in Health Care – Legislation and Regulation Review Final Report (2025). Department of Health and Aged Care. https://www.health.gov.au/sites/default/files/2025-07/safe-and-responsible-artificial-intelligence-in-health-care-legislation-and-regulation-review-final-report.pdf

- Clarifying and Strengthening the Regulation of Medical Device Software including Artificial Intelligence (2025). Therapeutic Goods Administration. https://www.tga.gov.au/resources/publication/publications/clarifying-and-strengthening-regulation-medical-device-software-including-artificial-intelligence-ai

- National AI Plan (2025). Department of Industry, Science and Resources. https://www.industry.gov.au/publications/national-ai-plan

- Digital Health Laws and Regulations Report 2025 Australia. ICLG. https://iclg.com/practice-areas/digital-health-laws-and-regulations/australia

- Healthcare AI 2025 – Australia. Chambers and Partners. https://practiceguides.chambers.com/practice-guides/healthcare-ai-2025/australia/trends-and-developments

- Guidance on privacy and the use of commercially available AI products (2025). OAIC. https://www.oaic.gov.au/privacy/privacy-guidance-for-organisations-and-government-agencies/guidance-on-privacy-and-the-use-of-commercially-available-ai-products

- Meeting your professional obligations when using Artificial Intelligence in healthcare. AHPRA. https://www.ahpra.gov.au/Resources/Artificial-Intelligence-in-healthcare.aspx
